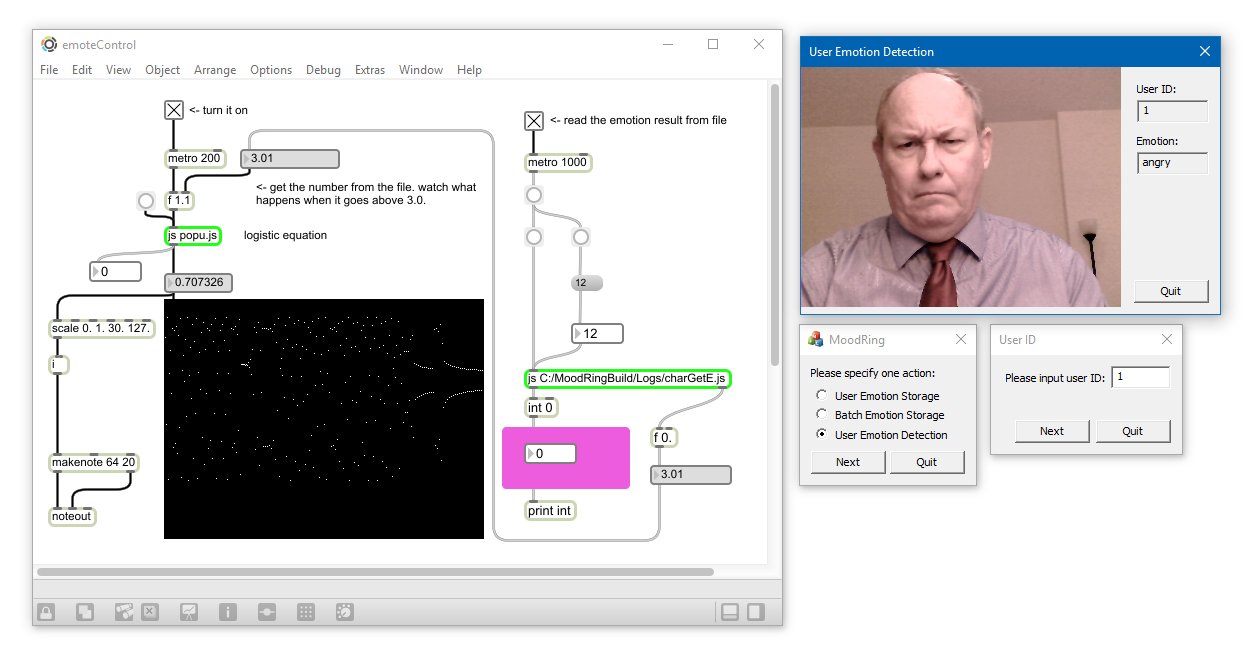

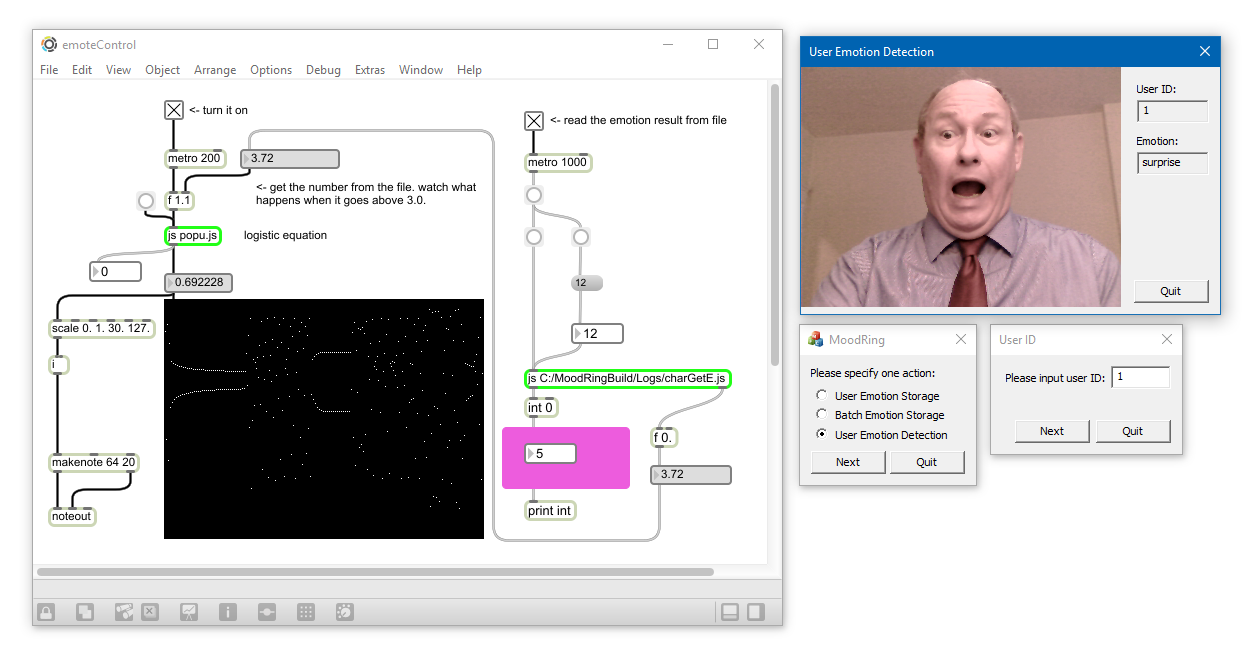

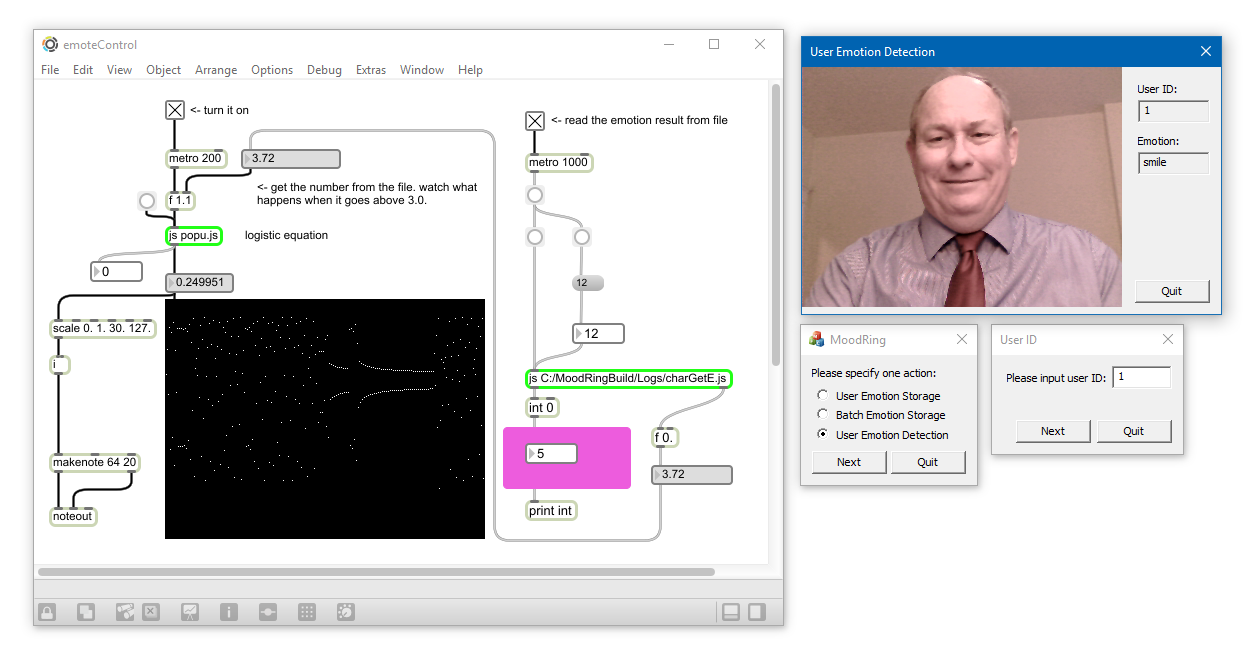

Emotion Detection

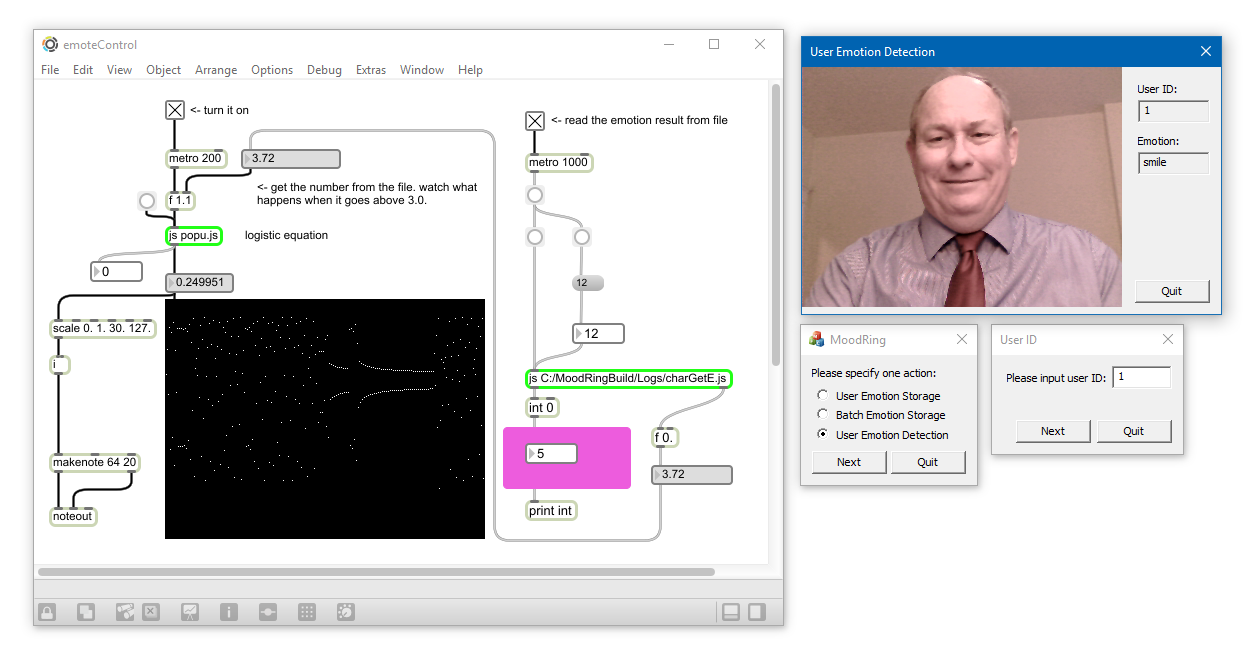

The image above is a screen capture of “Emota™ v3.0” applied to the control of a musical composition. Other applications include controlling environmental or media content in public spaces, sculptural objects, or social media applications.

We adopt a set of pre-trained Haar classifiers [1] to locate a single pair of eyes and a mouth [2] [3]; only the eyes and mouth are used for facial detection. If multiple rectangles are found for the same facial part, we run a clustering algorithm based on the Euclidean distance between the locations of the candidates to estimate the target rectangle. A set of anchor points is then derived from these rectangles.

Before feature extraction, lumen normalization is applied to the detected facial regions so that lighting conditions have less effect on the feature extraction process. Numeric features are then extracted by convolution with a set of pre-calculated Gabor filters called the Gabor Bank. The Gabor filters derive the orientations of features in the captured image through pattern analysis of the directional distribution of those features, and using them increases the accuracy of the anchor points derived in elastic bunch graph matching.
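The rectangle clustering and lighting normalization steps can be illustrated with a short sketch. It assumes each candidate rectangle is an `(x, y, width, height)` tuple, groups candidates greedily by the Euclidean distance between their centres, and returns the mean rectangle of the largest group. It also uses zero-mean, unit-variance scaling as a stand-in for lumen normalization. The function names, the `max_dist` threshold, and the "largest cluster wins" rule are hypothetical choices, not the project's actual implementation.

```python
import math
from typing import List, Tuple

Rect = Tuple[float, float, float, float]  # (x, y, width, height)


def rect_center(r: Rect) -> Tuple[float, float]:
    x, y, w, h = r
    return (x + w / 2.0, y + h / 2.0)


def estimate_target_rect(candidates: List[Rect], max_dist: float) -> Rect:
    """Group candidate rectangles whose centres lie within max_dist
    (Euclidean) of a cluster's first member, then return the mean
    rectangle of the largest cluster. Greedy grouping, the threshold and
    the 'largest cluster wins' rule are illustrative assumptions."""
    if not candidates:
        raise ValueError("no candidate rectangles")
    clusters: List[List[Rect]] = []
    for rect in candidates:
        cx, cy = rect_center(rect)
        for cluster in clusters:
            sx, sy = rect_center(cluster[0])
            if math.hypot(cx - sx, cy - sy) <= max_dist:
                cluster.append(rect)
                break
        else:  # no nearby cluster: start a new one
            clusters.append([rect])
    best = max(clusters, key=len)
    n = len(best)
    x, y, w, h = (sum(r[i] for r in best) / n for i in range(4))
    return (x, y, w, h)


def normalize_lighting(patch: List[List[float]]) -> List[List[float]]:
    """Shift and scale a grey-level patch to zero mean and unit variance
    (an assumed stand-in for the lumen normalization step)."""
    flat = [p for row in patch for p in row]
    mean = sum(flat) / len(flat)
    std = math.sqrt(sum((p - mean) ** 2 for p in flat) / len(flat)) or 1.0
    return [[(p - mean) / std for p in row] for row in patch]
```

For example, two overlapping eye detections and one spurious far-away detection collapse to the average of the two overlapping rectangles. A uniform patch is left at zero rather than divided by a zero standard deviation.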

Operations on the elastic bunch graph include graph matching, graph pruning, and adding sub-graphs from either an image or an XML file. The elastic bunch graph convolves certain areas of the image with every filter in the Gabor Bank. This yields a list of anchor information for all anchor points, where each anchor's information contains the convolution results for every filter in the Gabor Bank. In training mode, the program stores these hierarchical results as an XML file; otherwise, emotion detection follows feature extraction.
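To make the per-anchor feature lists concrete, here is a minimal sketch of a Gabor Bank and of computing one anchor's information as the responses of every filter at that anchor. It uses the real part of a standard 2-D Gabor kernel. The bank layout (4 orientations, wavelengths 4 and 8, `sigma = 0.56 * wavelength`, 9×9 kernels), the zero padding at image borders, and the function names are assumptions for illustration only.

```python
import math
from typing import List, Sequence, Tuple

Kernel = List[List[float]]
Image = List[List[float]]


def gabor_kernel(size: int, theta: float, lambd: float, sigma: float,
                 gamma: float = 0.5, psi: float = 0.0) -> Kernel:
    """Real part of a 2-D Gabor filter sampled on an odd size x size grid."""
    half = size // 2
    kernel = []
    for y in range(-half, half + 1):
        row = []
        for x in range(-half, half + 1):
            xr = x * math.cos(theta) + y * math.sin(theta)   # rotated coords
            yr = -x * math.sin(theta) + y * math.cos(theta)
            envelope = math.exp(-(xr ** 2 + (gamma * yr) ** 2) / (2 * sigma ** 2))
            row.append(envelope * math.cos(2 * math.pi * xr / lambd + psi))
        kernel.append(row)
    return kernel


def make_gabor_bank(size: int = 9, orientations: int = 4,
                    wavelengths: Sequence[float] = (4.0, 8.0)) -> List[Kernel]:
    """Hypothetical bank: one kernel per (wavelength, orientation) pair."""
    return [gabor_kernel(size, k * math.pi / orientations, lam, sigma=0.56 * lam)
            for lam in wavelengths for k in range(orientations)]


def response_at(image: Image, cx: int, cy: int, kernel: Kernel) -> float:
    """Filter response of the patch centred on (cx, cy). This is a correlation,
    which equals convolution for these symmetric (psi = 0) kernels.
    Pixels outside the image count as zero."""
    half = len(kernel) // 2
    total = 0.0
    for ky, row in enumerate(kernel):
        for kx, weight in enumerate(row):
            y, x = cy + ky - half, cx + kx - half
            if 0 <= y < len(image) and 0 <= x < len(image[0]):
                total += weight * image[y][x]
    return total


def extract_anchor_info(image: Image, anchors: List[Tuple[int, int]],
                        bank: List[Kernel]) -> List[List[float]]:
    """One entry per (x, y) anchor, each holding the responses of all filters."""
    return [[response_at(image, x, y, k) for k in bank] for (x, y) in anchors]
```

With this layout each anchor is described by 8 numbers, one per filter. The list of those per-anchor lists is the kind of data that would be written out in training mode or passed on to emotion detection.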

Graph pruning is the core function of the Database Compressor. The pruning algorithm is essentially a variant of DBSCAN [17], in which the distance between two sub-graphs is defined as the sum of the Euclidean distances between their convolution results over all anchor points. If a cluster contains at least the minimum number of neighboring sub-graphs, each within a distance of at most eps, all sub-graphs in that cluster are combined into one. Very similar sub-graphs are thus merged, reducing both storage space and comparison time.
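The pruning step can be sketched as textbook DBSCAN run over sub-graphs with the distance defined above. Each sub-graph is represented as a list of per-anchor response lists. Two choices here are assumptions, because the text does not say how sub-graphs are "combined into one": each cluster is merged by taking the element-wise mean, and noise sub-graphs are kept unchanged. The function names are hypothetical.

```python
import math
from typing import List, Optional

SubGraph = List[List[float]]  # one list of filter responses per anchor point


def graph_distance(a: SubGraph, b: SubGraph) -> float:
    """Sum over anchor points of the Euclidean distance between responses."""
    return sum(math.dist(ra, rb) for ra, rb in zip(a, b))


def dbscan(graphs: List[SubGraph], eps: float, min_pts: int) -> List[int]:
    """Textbook DBSCAN over sub-graphs; returns a cluster id per graph, -1 = noise."""
    labels: List[Optional[int]] = [None] * len(graphs)

    def neighbours(i: int) -> List[int]:
        return [j for j in range(len(graphs))
                if graph_distance(graphs[i], graphs[j]) <= eps]

    cluster = -1
    for i in range(len(graphs)):
        if labels[i] is not None:
            continue
        seeds = neighbours(i)
        if len(seeds) < min_pts:
            labels[i] = -1  # noise for now; may later become a border point
            continue
        cluster += 1
        labels[i] = cluster
        queue = [j for j in seeds if j != i]
        while queue:
            j = queue.pop()
            if labels[j] == -1:
                labels[j] = cluster  # border point: joins, but is not expanded
            if labels[j] is not None:
                continue
            labels[j] = cluster
            more = neighbours(j)
            if len(more) >= min_pts:  # j is a core point: keep expanding
                queue.extend(more)
    return [lab if lab is not None else -1 for lab in labels]


def average_graph(members: List[SubGraph]) -> SubGraph:
    """Element-wise mean of several sub-graphs (assumed merge rule)."""
    n = len(members)
    return [[sum(vals) / n for vals in zip(*anchors)] for anchors in zip(*members)]


def prune(graphs: List[SubGraph], eps: float, min_pts: int) -> List[SubGraph]:
    """Merge each DBSCAN cluster into one sub-graph; keep noise graphs as-is."""
    labels = dbscan(graphs, eps, min_pts)
    merged = [average_graph([g for g, lab in zip(graphs, labels) if lab == c])
              for c in sorted(set(labels) - {-1})]
    merged.extend(g for g, lab in zip(graphs, labels) if lab == -1)
    return merged
```

Three nearly identical sub-graphs and one outlier, pruned with `eps = 0.5` and `min_pts = 2`, shrink to two stored sub-graphs: the averaged cluster and the untouched outlier.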

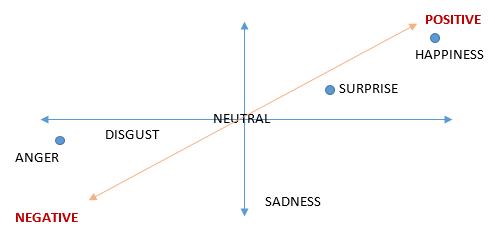

Emotion detection is a similarity comparison process. The target graph is compared with every sub-graph of all seven emotions [9] in the FERET dataset and is assigned the same emotion type as its most similar sub-graph. To compare two graphs, we calculate a weighted average of the distances between the convolution results at all anchors in the graphs. When the program is initialized, a mathematical model determined by the Weight Trainer is loaded, so that the weight of each anchor can be used to measure graph similarity.
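The comparison can be sketched as a weighted nearest-neighbour search. In this sketch the per-anchor weights are assumed to be given as a plain list, standing in for whatever the Weight Trainer model produces. The sub-graph database is assumed to be a dictionary keyed by emotion label, and each anchor's distance is assumed to be Euclidean. The names `weighted_graph_distance` and `classify` are hypothetical.

```python
import math
from typing import Dict, List, Optional, Sequence, Tuple

SubGraph = List[List[float]]  # one list of filter responses per anchor point


def weighted_graph_distance(a: SubGraph, b: SubGraph,
                            weights: Sequence[float]) -> float:
    """Weighted average over anchors of the Euclidean distance between the
    anchors' filter responses; `weights` stands in for the per-anchor
    weights a trained model would supply (assumed given here)."""
    total_w = sum(weights)
    return sum(w * math.dist(ra, rb)
               for w, ra, rb in zip(weights, a, b)) / total_w


def classify(target: SubGraph, database: Dict[str, List[SubGraph]],
             weights: Sequence[float]) -> Tuple[str, float]:
    """Give the target the emotion label of its closest stored sub-graph."""
    best_label: Optional[str] = None
    best_dist = math.inf
    for emotion, graphs in database.items():
        for g in graphs:
            d = weighted_graph_distance(target, g, weights)
            if d < best_dist:
                best_label, best_dist = emotion, d
    if best_label is None:
        raise ValueError("empty sub-graph database")
    return best_label, best_dist
```

A full database would hold one entry per emotion (seven in the text). Because the distance is a weighted average, anchors that the trained model considers more discriminative pull the decision more strongly.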

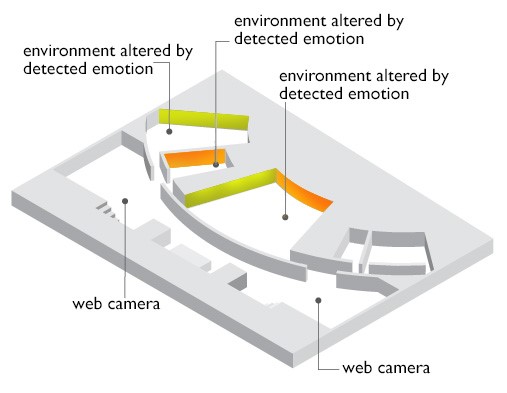

Historically, artists have explored projected imagery or light works as a primary medium. These works may fall into one or more genres, or may sit between genres of art. Among installation and environmental artworks, the work of Dan Flavin [10] is exemplary in its use of light as a singular imaging medium. Flavin’s work, as he has described it, is created and experienced through a strict formalist approach; formalism focuses on the way objects are made and on their purely visual aspects. Nevertheless, works such as Flavin’s, though made of static light, alter or inform the audience’s perception of the spaces in which they are installed. In our study of interactive elements, we ask: can the viewer’s perception be altered by shifting color or imagery based on responses detected from the viewers themselves? Further, can the detection of subtle emotional cues be used to alter the qualities of the imagery or installation?

More recently, projecting video or animated imagery onto building facades or in public spaces has become a common way to attract viewer engagement. In new media art experiences of this kind, such as the 2011 transformed façade of St. Patrick’s Cathedral and the New Museum in New York [12], altered architectural and public spaces become a “canvas” on which images and media content can be viewed outside the special circumstances of the gallery or museum. As for audience interaction, sensors and vision systems are already being used to encourage audience participation. Can subtle emotional cues be used as well?

Collaborators: Pensyl, W. R.; Song Shuli Lily; Dias, Walson; Huang, Walter Yucheng; Odell, Conor; Zhang, Yifan Henry