Haptic Feedback in Mixed Reality

Augmented Reality (AR) has developed over the past decade, with many applications in entertainment, training, and education. Vision-based marker tracking has long been a common technique in AR applications because it is low-cost and supported by freely available libraries such as ARToolKit. Recent research aims to enable markerless tracking, removing the need for the unnatural black-and-white fiducial markers that must be attached to the subject being tracked. Common markerless tracking techniques extract natural image features from the video stream.

However, because of perspective foreshortening, visual clutter, occlusions, sparseness and lack of texture [1], feature-detection approaches are inconsistent and unreliable across scenarios. This project proposes a markerless tracking technique based on an image analysis-by-synthesis approach: it minimizes the relative difference in image illumination between the synthesized and captured images. By using a 3D geometric model and correct illumination synthesis, it promises more robust and stable results across different scenarios. Its biggest concerns, however, are speed and efficient initialization. The aim of our project is to investigate and produce robust, real-time markerless tracking. Speed can be improved by reducing the number of iterations through different optimization techniques, and Graphics Processing Units (GPUs) will be exploited to accelerate rendering and optimization through their parallel processing capabilities. The outcomes of this project include new reusable software modules for AR, VR, and HCI applications.
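The analysis-by-synthesis loop can be illustrated with a deliberately tiny stand-in. This is not the project's tracker: a 2D Gaussian blob replaces the rendered 3D model, two image-plane translation parameters replace the full camera pose, and Gauss-Newton with a finite-difference Jacobian is simply our choice of optimizer. The functions `render`, `residuals`, `relative_error` and `track` are hypothetical names. Each iteration synthesizes an image from the current estimate, compares it with the captured image, and updates the parameters to reduce the difference; fewer iterations means faster tracking, which is where the optimization and GPU work described above would pay off.

```python
import math

def render(px, py, width=32, height=32, sigma=3.0):
    """Toy 'synthesis' step: image of a Gaussian blob centred at (px, py)."""
    k = 1.0 / (2.0 * sigma * sigma)
    return [[math.exp(-((x - px) ** 2 + (y - py) ** 2) * k) for x in range(width)]
            for y in range(height)]

def residuals(params, captured):
    """Per-pixel difference between the synthesized and captured images."""
    synth = render(*params)
    return [s - c for srow, crow in zip(synth, captured) for s, c in zip(srow, crow)]

def relative_error(params, captured):
    """Squared difference normalised by the captured image energy."""
    r = residuals(params, captured)
    energy = sum(c * c for row in captured for c in row)
    return sum(v * v for v in r) / energy

def track(captured, init, max_iters=20, eps=1e-4, tol=1e-12):
    """Gauss-Newton refinement of (px, py) using a forward-difference Jacobian.

    Minimizing the sum of squared residuals also minimizes relative_error,
    because the normalising energy term does not depend on the parameters.
    """
    p = list(init)
    for _ in range(max_iters):
        r = residuals(p, captured)
        cols = []
        for i in range(2):
            q = list(p)
            q[i] += eps
            cols.append([(a - b) / eps for a, b in zip(residuals(q, captured), r)])
        jx, jy = cols
        # Normal equations (J^T J) delta = -J^T r, solved in closed form (2x2).
        a = sum(u * u for u in jx)
        b = sum(u * v for u, v in zip(jx, jy))
        c = sum(v * v for v in jy)
        gx = sum(u * e for u, e in zip(jx, r))
        gy = sum(v * e for v, e in zip(jy, r))
        det = a * c - b * b
        dx = -(c * gx - b * gy) / det
        dy = -(a * gy - b * gx) / det
        p[0] += dx
        p[1] += dy
        if dx * dx + dy * dy < tol:  # step is negligible: converged
            break
    return tuple(p)

captured = render(15.4, 17.8)                  # stands in for the camera frame
estimate = track(captured, init=(13.0, 15.0))  # rough initialization
print(estimate, relative_error(estimate, captured))
```

The initial guess matters: like the real method, this toy only converges when initialization lands within the basin of the true pose (here, within a few blob widths), which is why efficient initialization is singled out as a key concern.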

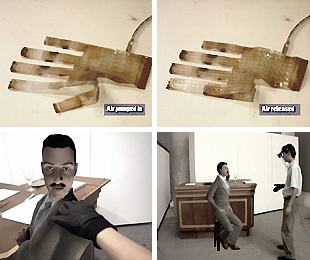

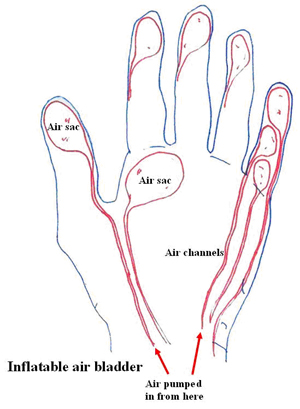

The haptic glove consists of an inflatable air bladder inserted between its linings (Figure 1). The air bladder is connected to a pneumatic system. When triggered, the pneumatic system quickly pulses air into the bladder, creating a sensation of touch on the hand.
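How the pneumatic system is triggered is not described here. As one possible sketch, assume the tracker reports a per-frame boolean "the glove is in contact with the avatar" and the valve accepts simple open/closed commands. A controller can then fire one fixed-length pulse each time contact begins, with a refractory period so that jittery contact does not produce a burst of pulses. `PulseController`, `pulse_ms` and `refractory_ms` are hypothetical names and values, not the glove's actual interface.

```python
class PulseController:
    """Edge-triggered pulse scheduler for a hypothetical pneumatic valve."""

    def __init__(self, pulse_ms=50, refractory_ms=200):
        self.pulse_ms = pulse_ms            # how long the valve stays open per pulse
        self.refractory_ms = refractory_ms  # minimum gap between pulse starts
        self.in_contact = False
        self.valve_open_until = None        # time (ms) at which the valve closes
        self.last_pulse_start = None

    def update(self, now_ms, contact):
        """Feed one tracking frame; return True if the valve should be open."""
        if contact and not self.in_contact:  # contact has just begun
            ready = (self.last_pulse_start is None
                     or now_ms - self.last_pulse_start >= self.refractory_ms)
            if ready:
                self.last_pulse_start = now_ms
                self.valve_open_until = now_ms + self.pulse_ms
        self.in_contact = contact
        return self.valve_open_until is not None and now_ms < self.valve_open_until

# Simulated 10 ms frames: a long touch, a release, then a second short touch.
ctrl = PulseController(pulse_ms=50, refractory_ms=200)
contact_ms = set(range(100, 300, 10)) | set(range(320, 340, 10))
timeline = [(t, ctrl.update(t, t in contact_ms)) for t in range(0, 400, 10)]
open_times = [t for t, is_open in timeline if is_open]
print(open_times)
```

Sustained contact produces a single pulse rather than a continuously open valve; a pressure-style sensation (such as pushing against the avatar's hand) would need a different policy, for example keeping the valve open while contact persists.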

This work builds an interactive system that provides high-fidelity haptic feedback to the user. We want the user to have a rich, multimodal sensory experience in an interactive AR environment, in which users can interact intuitively with virtual avatars. Haptic interaction is an appealing feature in AR: imagine a popular online virtual community in which users can not only talk to a virtual avatar but also shake hands with it. As one psychologist put it, no other sense is more able to convince us of the reality of an object than our sense of touch.

In this setup, the user wears a head-mounted display (HMD) with two cameras mounted on it, together with our custom-made haptic glove. The user can walk freely around the designated area, which contains a bar table and a chair. Through the HMD, he sees a life-size, realistic virtual human avatar sitting in the chair. As the user approaches and pats the avatar on the shoulder, the avatar turns his head towards the user and greets him. In another scene, the avatar initiates the interaction by holding out his hand. The user then extends his own hand and interactively controls the avatar's virtual hand; through haptic feedback, the user can feel the pressure of pushing against the avatar's hand.

Two separate computer vision tracking techniques are used for two different purposes. First, the physical environment is tracked with the Parallel Tracking and Mapping (PTAM) technique, which establishes the frame of reference of the user's cameras with respect to the environment. This gives the 3D virtual avatar a fixed frame of reference in world coordinates, and robust tracking allows the avatar to be rendered stably. Second, the user's glove is tracked with the two cameras using stereo matching. The glove is first segmented by color, then every pixel of the glove silhouettes is matched between the two camera images, from which the depth of the glove is obtained.
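The glove pipeline (color segmentation, per-pixel stereo matching, depth) can be sketched as follows. Several assumptions are ours and are not stated above: the stereo pair is rectified, so matches lie on the same scanline; the glove is green and a channel-dominance threshold segments it; matching uses a sum-of-absolute-differences (SAD) window along the scanline, a simple stand-in that may differ from the authors' matcher; and depth follows the standard rectified-stereo relation $Z = fB/d$, with focal length $f$ in pixels, baseline $B$ in metres and disparity $d$ in pixels. The values `focal_px=400.0` and `baseline_m=0.06` are illustrative, and all function names are hypothetical.

```python
def segment(image, min_margin=40):
    """Binary mask: True where a pixel is dominated by the (assumed) green glove colour."""
    return [[g > r + min_margin and g > b + min_margin for (r, g, b) in row]
            for row in image]

def window_sad(left_row, right_row, xl, xr, half):
    """Sum of absolute green-channel differences over a 1D window on one scanline."""
    w = len(left_row)
    cost = 0
    for k in range(-half, half + 1):
        a = left_row[min(max(xl + k, 0), w - 1)][1]   # clamp at image borders
        b = right_row[min(max(xr + k, 0), w - 1)][1]
        cost += abs(a - b)
    return cost

def glove_depth(left, right, focal_px, baseline_m, max_disp=16, half=2):
    """Match every left-silhouette pixel along its scanline; return {(x, y): depth_m}."""
    mask_l, mask_r = segment(left), segment(right)
    depths = {}
    for y, (lrow, rrow) in enumerate(zip(left, right)):
        for x, inside in enumerate(mask_l[y]):
            if not inside:
                continue
            best = None  # (cost, disparity)
            for d in range(1, max_disp + 1):
                xr = x - d
                if xr < 0 or not mask_r[y][xr]:
                    continue  # only match against the glove silhouette in the right image
                cost = window_sad(lrow, rrow, x, xr, half)
                if best is None or cost < best[0]:
                    best = (cost, d)
            if best is not None:
                depths[(x, y)] = focal_px * baseline_m / best[1]  # Z = f * B / d
    return depths

def make_view(glove_x, width=40, height=6, glove_w=12):
    """Synthetic view: grey background plus a textured green 'glove' strip."""
    img = [[(90, 90, 90)] * width for _ in range(height)]
    for y in (2, 3):
        for u in range(glove_w):
            img[y][glove_x + u] = (30, 100 + 5 * u, 30)
    return img

left, right = make_view(20), make_view(12)  # glove shifted by 8 px between views
depths = glove_depth(left, right, focal_px=400.0, baseline_m=0.06)
print(len(depths), sorted(set(round(z, 6) for z in depths.values())))
```

In this synthetic pair every glove pixel has a disparity of 8 px, so each recovers a depth of 400 × 0.06 / 8 = 3.0 m. On real footage, untextured glove regions make matches ambiguous, which is one reason the segmentation step restricts the search to silhouette pixels.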

Research conceived and developed by Lee Shang Ping, directed by Russell Pensyl, with Tran Cong Thien Qui and Li Hua. Developed at the Interaction and Entertainment Research Centre, Nanyang Technological University, Singapore.

1. ARToolKit. http://www.hitl.washington.edu/artoolkit

Caarls, J., Jonker, P.P., Persa, S. 2003. Sensor fusion for augmented reality. In EUSAI 2003, 160-176.

2. Hol, J.D., Schön, T.B., Gustafsson, F., Slycke, P.J. 2006. Sensor fusion for augmented reality. In Proceedings of the 9th International Conference on Information Fusion.

3. Kato, H., Billinghurst, M. 1999. Marker tracking and HMD calibration for a video-based augmented reality conferencing system. In Proceedings of the 2nd International Workshop on Augmented Reality (IWAR 99), October 1999, San Francisco, USA.

4. Ksatria Gameworks. http://www.ksatria.com

5. MXRToolKit. http://sourceforge.net/projects/mxrtoolkit

6. Nguyen, T.H.D., Qui, T.C.T., Xu, K., Cheok, A.D., Teo, S.L., Zhou, Z.Y., Allawaarachchi, A., Lee, S.P., Liu, W., Teo, H.S., Thang, L.N., Li, Y., and Kato, H. 2005. Real Time 3D Human Capture System for Mixed-Reality Art and Entertainment. IEEE Transactions on Visualization and Computer Graphics (TVCG), 11, 6 (November-December 2005), 706-721.

7. Slabaugh, G., Schafer, R., Levingston, M. 2001. Optimal ray intersection for computing 3D points from N-view correspondences.

8. Song, M., Elias, T., Martinovic, I., Mueller-Wittig, W., Chan, T.K.Y. 2004. Digital Heritage Application as an Edutainment Tool. In Proceedings of the 2004 ACM SIGGRAPH International Conference on Virtual Reality Continuum and its Applications in Industry.

9. Sparacino, F., Wren, C., Davenport, G., Pentland, A. 1999. Augmented performance in dance and theater. In International Dance and Technology 99, ASU, Tempe, Arizona, February 1999.

10. Uematsu, Y., Saito, H. 2007. Improvement of accuracy for 2D marker-based tracking using particle filter. In Proceedings of 17th International Conference on Artificial Reality and Telexistence.

11. Wii Remote. http://wiibrew.org/wiki/Wii_Remote

12. WiiYourself. http://wiiyourself.gl.tter.org