An “On-the-Fly” Pseudo Model-based Augmented Reality

Augmented reality is widely applied in areas such as education, entertainment and simulation. Most tabletop augmented reality applications use fiducial markers for tracking and rely on accurate tracking and mapping of a database of marker images. The problem with this marker-based approach is that tracking is frequently lost, for example when the marker is partially occluded or the ambient lighting is poor. Marker-based tracking is also undesirable because fiducial markers are unaesthetic.

In advanced augmented reality, the trend is to use useful features on the subject itself for tracking. Feature-based tracking tracks 2D and 3D surfaces. Normally this approach requires 2D or 3D models of the objects or scenes to be created beforehand, which is a time-consuming process.

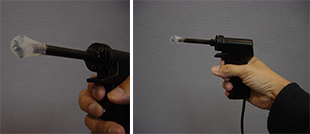

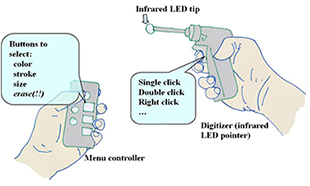

We have developed an "On-the-Fly" approach that allows developers to create models easily and quickly while they work. It uses a pointing device, which we call the 3D digitizer, with an infrared LED at its tip.

An improved version of the 3D digitizer allows users to perform more sophisticated tasks, such as drawing 3D lines in different colors. A separate hardware menu controller lets users choose the stroke thickness and color by pressing its buttons. In addition, a head-mounted display (HMD) lets users see what they are drawing in 3D space. The user holds the menu controller in one hand and the 3D digitizer in the other.

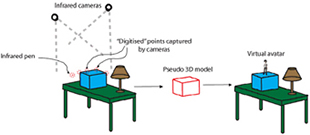

Overall setup and conception:

Two Wii Remote cameras are mounted on the wall or ceiling, where they can track the 3D digitizer. A separate viewing camera is attached to the user's head-mounted display. The user points the 3D digitizer at surfaces of the object of interest to map out the useful features on the object.

The Wii Remotes can track the 3D digitizer at a very high rate. The 3D position of the digitizer is found by triangulation. Once the features have been mapped, the tracking algorithm takes over and tracks these natural features. The tracking technique is a combination of scale-invariant feature transform (SIFT) and parallel tracking and mapping (PTAM).
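The triangulation step can be illustrated with a minimal sketch. It assumes that each Wii Remote's 2D infrared reading has already been converted, through a camera calibration not described here, into a ray (camera position plus viewing direction) in a shared world frame. The function name `triangulate_midpoint` and the midpoint-of-closest-approach method are illustrative assumptions, not necessarily the method used in this system.

```python
from typing import Tuple

Vec3 = Tuple[float, float, float]


def _sub(a: Vec3, b: Vec3) -> Vec3:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def _dot(a: Vec3, b: Vec3) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def _along(origin: Vec3, direction: Vec3, t: float) -> Vec3:
    return (
        origin[0] + t * direction[0],
        origin[1] + t * direction[1],
        origin[2] + t * direction[2],
    )


def triangulate_midpoint(
    o1: Vec3, d1: Vec3, o2: Vec3, d2: Vec3, eps: float = 1e-12
) -> Vec3:
    """Estimate a 3D point from two viewing rays (hypothetical helper).

    Each ray is origin + t * direction. With measurement noise the rays
    rarely intersect exactly, so this returns the midpoint of the
    shortest segment joining the two lines.
    """
    w = _sub(o1, o2)
    a = _dot(d1, d1)
    b = _dot(d1, d2)
    c = _dot(d2, d2)
    d = _dot(d1, w)
    e = _dot(d2, w)

    denom = a * c - b * b
    if abs(denom) < eps:
        # Parallel rays give no unique closest point.
        raise ValueError("rays are parallel or nearly parallel")

    s = (b * e - c * d) / denom  # parameter along ray 1
    t = (a * e - b * d) / denom  # parameter along ray 2

    p1 = _along(o1, d1, s)
    p2 = _along(o2, d2, t)
    return (
        (p1[0] + p2[0]) / 2.0,
        (p1[1] + p2[1]) / 2.0,
        (p1[2] + p2[2]) / 2.0,
    )


# Example: two cameras 2 units apart, both seeing the LED tip.
tip = triangulate_midpoint(
    (0.0, 0.0, 0.0), (1.0, 1.0, 3.0),
    (2.0, 0.0, 0.0), (-1.0, 1.0, 3.0),
)
```

A wider baseline between the two cameras keeps the rays further from parallel, which makes the estimate less sensitive to noise in the measured directions.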

2. http://artoolkit.sourceforge.net/

3. L. Vacchetti, V. Lepetit and P. Fua. Stable Real-Time 3D Tracking Using Online and Offline Information. In IEEE Transactions on Pattern Analysis and Machine Intelligence, Vol. 26, Nr. 10, pp. 1385-1391, 2004.

4. V. Lepetit and P. Fua. Keypoint Recognition using Randomized Trees. In IEEE Transactions on Pattern Analysis and Machine Intelligence, Vol. 28, Nr. 9, pp. 1465-1479, 2006.

5. M. Ozuysal, P. Fua, V. Lepetit. Fast Keypoint Recognition in Ten Lines of Code. In Conference on Computer Vision and Pattern Recognition, Minneapolis, 2007.

6. G. Reitmayr and T. W. Drummond. Going out: robust model-based tracking for outdoor augmented reality. In The IEEE/ACM International Symposium on Mixed and Augmented Reality, October 2006.

7. G. Slabaugh, R. Schafer, and M. Livingston. Optimal Ray Intersection for Computing 3D Points from N-View Correspondences, October 2, 2001.

8. G. Klein and D. Murray. Parallel Tracking and Mapping for Small AR Workspaces. In International Symposium on Mixed and Augmented Reality (ISMAR'07, Nara).