Red, Green, Wait 红绿灯 | Emota™ v4.0

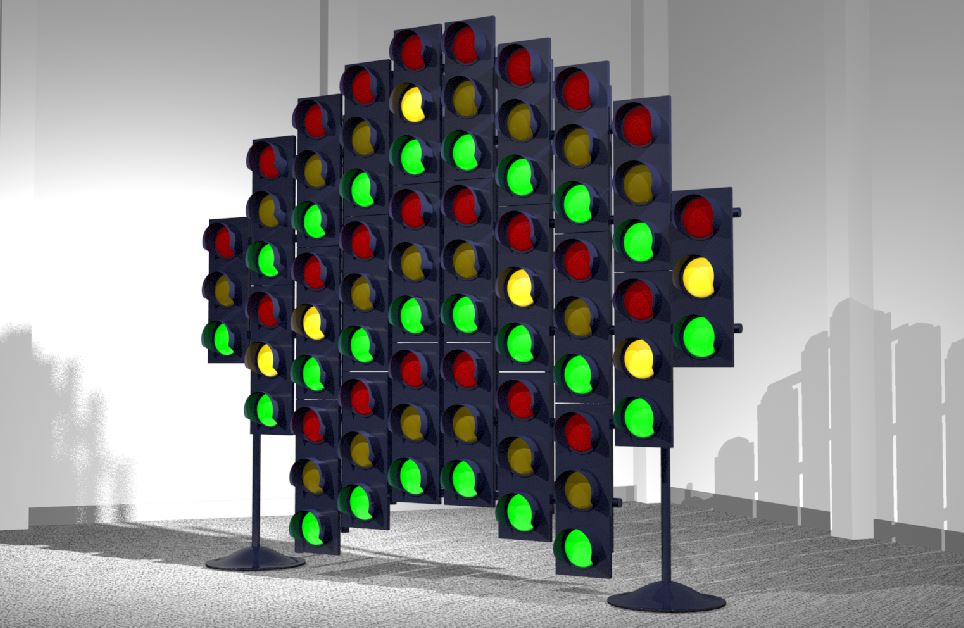

The image above, taken from the beginning of the project video, is a preliminary design view of an installation that employs Emota™ v4.0. The video demonstrates the working traffic-signal array with emotion detection. This iteration of Emota™ is written specifically to run on Raspberry Pi microcomputers. The installation uses 25 actual traffic signals controlled by a Raspberry Pi 3, a vision system, five 16-channel relay banks and five MCP23017 GPIO expander ICs. The light patterns change state based on the emotions detected in viewers in the public space.
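
The channel budget is worth checking. Assuming each signal has three lamps (red, yellow and green, an assumption), 25 signals need 75 switched channels. That fits within the 80 channels of five 16-channel relay banks and the 80 pins of five MCP23017s, each a 16-bit I/O expander with two 8-bit ports (A and B) on I2C addresses 0x20 to 0x27. The sketch below uses a hypothetical addressing scheme, not the installation's documented wiring. Signal `i` goes to chip `i // 5` at address `0x20 + chip`, and its three lamps occupy three consecutive pins, leaving pin 15 of each chip spare. For a given set of lit lamps, it computes the two output-latch bytes (OLATA, OLATB) that each chip would need. The names `lamp_location`, `latch_bytes` and the `active_low` option are illustrative only.

```python
from typing import Dict, Iterable, Tuple

# Hypothetical layout (assumption, not the installation's documented wiring):
# 25 signals, 3 lamps each, 5 signals per MCP23017, 16 pins per chip.
NUM_SIGNALS = 25
LAMPS = ("red", "yellow", "green")      # assumed lamp order within one signal
SIGNALS_PER_CHIP = 5
BASE_ADDRESS = 0x20                     # MCP23017 I2C address with A2..A0 tied low

# MCP23017 output-latch registers in the default IOCON.BANK = 0 mode.
OLATA = 0x14                            # port A, pins 0-7
OLATB = 0x15                            # port B, pins 8-15


def lamp_location(signal: int, lamp: str) -> Tuple[int, int]:
    """Return (i2c_address, pin 0-15) for one lamp under the assumed layout."""
    if not 0 <= signal < NUM_SIGNALS:
        raise ValueError(f"signal must be in 0..{NUM_SIGNALS - 1}")
    chip = signal // SIGNALS_PER_CHIP
    pin = (signal % SIGNALS_PER_CHIP) * len(LAMPS) + LAMPS.index(lamp)
    return BASE_ADDRESS + chip, pin


def latch_bytes(lit: Iterable[Tuple[int, str]],
                active_low: bool = False) -> Dict[int, Tuple[int, int]]:
    """Compute (OLATA, OLATB) byte values per chip for the given lit lamps."""
    # Start every chip with all 16 outputs off.
    words = {BASE_ADDRESS + c: 0 for c in range(NUM_SIGNALS // SIGNALS_PER_CHIP)}
    for signal, lamp in lit:
        address, pin = lamp_location(signal, lamp)
        words[address] |= 1 << pin
    result = {}
    for address, word in words.items():
        if active_low:                  # some relay boards energise on a LOW input
            word = ~word & 0xFFFF
        result[address] = (word & 0xFF, (word >> 8) & 0xFF)
    return result
```

For example, `latch_bytes((s, "red") for s in range(25))` sets pins 0, 3, 6, 9 and 12 on every chip, giving `(0x49, 0x12)` per address. On the Pi, each pair could then be written with an I2C library such as smbus2 (`bus.write_byte_data(address, OLATA, a)` and likewise for `OLATB`), after writing 0x00 to IODIRA and IODIRB (registers 0x00 and 0x01) to make all pins outputs.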

This installation was accepted for display in the prestigious 14th Arte Laguna Prize exhibition at the Nappe Arsenale in Venice, Italy, in 2021. Red, Green, Wait 红绿灯 was one of 120 finalist artworks, selected from among the 10,113 works submitted.

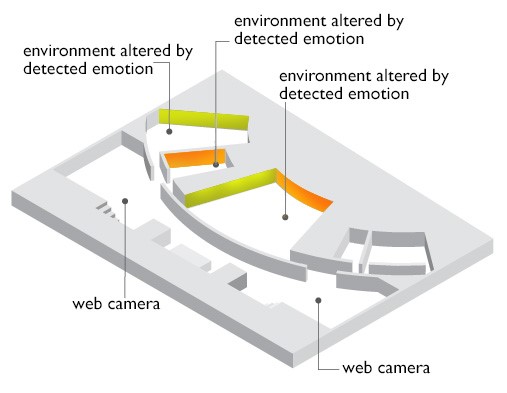

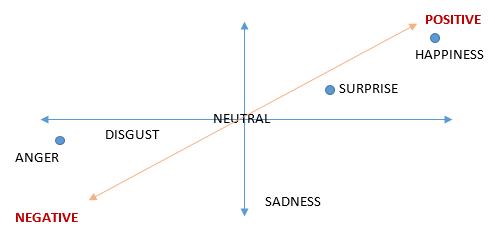

Building on software development in face detection and emotion detection, this installation explores how artworks and interactive experiences can be mediated through the detection of emotional expressions in public spaces. Cameras are strategically situated in a gallery, museum or other public space to detect faces in the field of view. Each image is segmented to locate faces, and each face is analysed for emotional expressions. The resulting emotion states are stored in a time-tracking database and averaged over a short time interval to create a composite of the emotions detected. The state of the light patterns is then modified based on this composite.
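
The time-tracking and averaging steps described above can be sketched as follows. This is a minimal sketch under stated assumptions, not Emota™'s actual implementation. It assumes the emotion classifier returns a score per label for each detected face, uses a 5-second averaging window, and maps the dominant emotion in the composite to a light pattern. The labels, the pattern names in `PATTERNS`, and the names `EmotionLog` and `choose_pattern` are all hypothetical.

```python
from collections import deque
from typing import Deque, Dict, Tuple

# Hypothetical emotion labels mapped to hypothetical light-pattern names.
PATTERNS = {
    "happy": "all_green_wave",
    "sad": "slow_red_fade",
    "angry": "rapid_red_flash",
    "surprise": "random_sparkle",
    "neutral": "standard_cycle",
}


class EmotionLog:
    """Time-stamped store of per-face emotion scores with a sliding-window composite."""

    def __init__(self, window_s: float = 5.0) -> None:
        self.window_s = window_s        # averaging interval in seconds (assumed 5 s)
        self._entries: Deque[Tuple[float, Dict[str, float]]] = deque()

    def add(self, timestamp: float, scores: Dict[str, float]) -> None:
        """Record one detected face's emotion scores; timestamps must be non-decreasing."""
        self._entries.append((timestamp, dict(scores)))

    def composite(self, now: float) -> Dict[str, float]:
        """Average the scores of all detections within the last window_s seconds."""
        # Discard detections older than the window (oldest entries sit at the left).
        while self._entries and self._entries[0][0] < now - self.window_s:
            self._entries.popleft()
        n = len(self._entries)
        if n == 0:
            return {}
        totals: Dict[str, float] = {}
        for _, scores in self._entries:
            for label, value in scores.items():
                totals[label] = totals.get(label, 0.0) + value
        # Labels missing from a detection count as 0 in that detection.
        return {label: total / n for label, total in totals.items()}


def choose_pattern(composite: Dict[str, float], default: str = "standard_cycle") -> str:
    """Pick a light pattern from the dominant emotion in the composite."""
    if not composite:
        return default                  # nobody detected within the window
    dominant = max(composite, key=composite.get)
    return PATTERNS.get(dominant, default)
```

With detections of a mostly happy face at t = 0 s, a mixed happy/sad face at t = 1 s and an angry face at t = 2 s, `choose_pattern(log.composite(now=3.0))` returns `"all_green_wave"`. At t = 6.5 s, only the angry detection remains in the window, so the result becomes `"rapid_red_flash"`. Averaging per detection weights every face equally. Averaging per frame first would instead weight moments equally, which may suit crowded spaces better.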

The installation can also use other forms of sensor detection; one method being explored is position tracking of individuals in the space.

Historically, artists have explored projected imagery and light works as a primary medium. These works may fall into one or more genres, or may sit between genres. Among installation and environmental artworks, the work of Dan Flavin is exemplary in its use of light as a singular imaging medium. Flavin's work, as he described it, is created and experienced in a strict formalist medium. Formalism focuses on the way objects are made and on their purely visual aspects. Nevertheless, works such as Flavin's, though made of static light, alter or inform the audience's spatial perception wherever they are installed. In our study of interactive elements, we ask: can the viewer's perception be altered by shifting color or imagery based on responses detected from the viewers themselves?

Further, can the detection of subtle emotional cues alter the qualities of the imagery or installation? More recently, projecting video or animated imagery onto building façades or into public spaces has become a common way to engage viewers. In new media works of this kind, such as the 2011 transformation of the façade of St. Patrick's Cathedral and the New Museum in New York, altered architectural and public spaces become a “canvas” where images and media content can be viewed outside the special circumstances of the gallery or museum. When considering ways to allow audience interaction, we know that sensors and vision systems are already used to encourage audience participation. Can subtle emotional cues be used as well?

Collaborators: W. R. Pensyl, Lily Song Shuli, Zach Stanziano, Chris Canal, Allen Tat, Leo Acevedo, Sean Pendergast, Shane Treadway and Paul Rhee

2. Messom, C., & Barczak, A. (2009). Fast and Efficient Rotated Haar-like Features Using Rotated Integral Images. International Journal of Intelligent Systems Technologies and Applications, 7(1), 40–57.

3. Eisenbarth, H., & Alpers, G. W. (2011). Happy Mouth and Sad Eyes: Scanning Emotional Facial Expressions. Emotion, 11(4), 860–865. American Psychological Association. doi:10.1037/a0022758

4. Viola, P., & Jones, M. (2001). Robust Real-Time Object Detection. Paper presented at the Second International Workshop on Statistical and Computational Theories of Vision: Modeling, Learning, Computing, and Sampling.

5. Bradski, G., & Kaehler, A. (2008). Learning OpenCV. O'Reilly.

6. Burges, C. J. C. (1998). A Tutorial on Support Vector Machines for Pattern Recognition. Data Mining and Knowledge Discovery, 2, 121–167.

7. https://www.nist.gov/itl/products-and-services/color-feret-database

8. Wiskott, L., Fellous, J.-M., Krüger, N., & von der Malsburg, C. (1997). Face Recognition by Elastic Bunch Graph Matching. IEEE Transactions on Pattern Analysis and Machine Intelligence, 19(7), 775–779.

9. Bronstein, A. M., Bronstein, M. M., & Kimmel, R. (2005). Three-Dimensional Face Recognition. International Journal of Computer Vision (IJCV), 64(1), 5–30.

10. Ekman, P. (1999). Basic Emotions. In T. Dalgleish & M. Power (Eds.), Handbook of Cognition and Emotion. Sussex, UK: John Wiley & Sons. http://www.paulekman.com/wp-content/uploads/2009/02/Basic-Emotions.pdf

11. https://chinati.org/collection/danflavin.php

12. http://www.newmuseum.org/ideascity/view/flash-light-mulberry-street-installations