Everyman, The Ultimate Commodity v2.0 - Augmented Reality in Theatre

Everyman, The Ultimate Commodity v2.0 was staged at the 2007 Fringe Theatre Festival in Toronto, Ontario, Canada.

The production is cited by HowlRound Theatre Commons as one of the first uses of mixed reality in a theatrical production.

There's something funny in the water, and your body (or bits of it) may be up for sale! Set in a sinister alternate-future Singapore, The Ultimate Commodity is a play by Daniel Jernigan based on a dystopian short story by Singaporean author Gopal Baratham. The work uses vision and sensor-fusion technology to combine live theatre and augmented reality into a new kind of narrative.

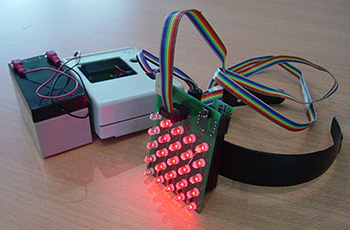

This work uses a hybrid tracking approach: cameras track the position of an active marker while inertial sensors track head orientation. The active marker is a single-color light-emitting diode (LED) mounted on the actor's head mount.
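A minimal sketch of the hybrid idea, assuming the vision tracker reports the LED's 3D position in stage coordinates and the inertial sensor reports head orientation as a unit quaternion `(w, x, y, z)`. The helper names `quat_to_matrix` and `fuse_pose` and the quaternion convention are illustrative assumptions, not the production's actual code; the point is only that position comes from one sensor, orientation from the other, and the two are composed into a single 4x4 head pose.

```python
import math


def quat_to_matrix(w, x, y, z):
    """Convert a unit quaternion (w, x, y, z) into a 3x3 rotation matrix."""
    # Normalize defensively so small numerical drift does not skew the rotation.
    n = math.sqrt(w * w + x * x + y * y + z * z)
    w, x, y, z = w / n, x / n, y / n, z / n
    return [
        [1 - 2 * (y * y + z * z), 2 * (x * y - w * z), 2 * (x * z + w * y)],
        [2 * (x * y + w * z), 1 - 2 * (x * x + z * z), 2 * (y * z - w * x)],
        [2 * (x * z - w * y), 2 * (y * z + w * x), 1 - 2 * (x * x + y * y)],
    ]


def fuse_pose(position, quaternion):
    """Hypothetical helper: combine a camera-tracked position with an
    inertial orientation into a 4x4 homogeneous head pose."""
    r = quat_to_matrix(*quaternion)
    px, py, pz = position
    return [
        r[0] + [px],  # rotation row plus x translation
        r[1] + [py],  # rotation row plus y translation
        r[2] + [pz],  # rotation row plus z translation
        [0.0, 0.0, 0.0, 1.0],
    ]


# Example: LED seen at (1.0, 1.6, 3.0) m, head turned 90 degrees about the vertical (y) axis.
half = math.radians(90) / 2
pose = fuse_pose((1.0, 1.6, 3.0), (math.cos(half), 0.0, math.sin(half), 0.0))
for row in pose:
    print([round(v, 3) for v in row])
```

Keeping the two sources separate like this reflects why a hybrid approach is attractive: the camera fixes where the head is, while the inertial sensor supplies how it is turned, which a single point-like LED cannot reveal on its own.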

Each actor wears a different-colored LED pad, which allows the system to distinguish the actors from one another. The system detects the three LED pads using high-end Dragonfly cameras as vision-tracking devices. A 6DOF inertial sensor attached to the head mount captures the orientation and rotation of the actor's head. The project also used real-time 3D rendering to animate virtual 3D heads, lip-synced to the characters' speech.
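To illustrate why distinct LED colors let the system tell the three actors apart, here is a minimal sketch that classifies pixels by their nearest reference color and returns one centroid per actor. The color assignments, the `max_dist` threshold, and the names `ACTOR_COLORS` and `find_markers` are hypothetical; the production's actual detection pipeline is not described in this write-up, and nearest-color classification is simply the most basic technique that shows the idea.

```python
from typing import Dict, List, Optional, Tuple

Pixel = Tuple[int, int, int]  # (R, G, B), each 0-255

# Hypothetical color assignment: one LED color per actor.
ACTOR_COLORS: Dict[str, Pixel] = {
    "actor_a": (255, 0, 0),
    "actor_b": (0, 255, 0),
    "actor_c": (0, 0, 255),
}


def color_distance(p: Pixel, q: Pixel) -> float:
    """Euclidean distance between two colors in RGB space."""
    return sum((a - b) ** 2 for a, b in zip(p, q)) ** 0.5


def find_markers(frame: List[List[Pixel]], max_dist: float = 120.0
                 ) -> Dict[str, Optional[Tuple[float, float]]]:
    """Return the (x, y) image centroid of each actor's LED,
    or None if that actor's color is not visible in the frame."""
    sums = {name: [0.0, 0.0, 0] for name in ACTOR_COLORS}  # sum_x, sum_y, count
    for y, row in enumerate(frame):
        for x, pixel in enumerate(row):
            # Assign the pixel to the nearest reference color, if it is close enough.
            name, ref = min(ACTOR_COLORS.items(),
                            key=lambda item: color_distance(pixel, item[1]))
            if color_distance(pixel, ref) <= max_dist:
                s = sums[name]
                s[0] += x
                s[1] += y
                s[2] += 1
    return {
        name: (s[0] / s[2], s[1] / s[2]) if s[2] else None
        for name, s in sums.items()
    }


# Toy 4x4 frame: dark background, a red LED spanning two pixels, one blue LED.
dark = (10, 10, 10)
frame = [[dark] * 4 for _ in range(4)]
frame[1][1] = frame[1][2] = (250, 20, 20)
frame[3][3] = (15, 30, 240)
print(find_markers(frame))
# → {'actor_a': (1.5, 1.0), 'actor_b': None, 'actor_c': (3.0, 3.0)}
```

A real tracker would typically combine several calibrated camera views to recover 3D position and would smooth detections over time; this sketch covers only the per-frame identification step.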

The “cloned” or “confused” identities among the characters in the play are implemented by “replacing” one actor's head with another's. Realistic 3D head models are rendered in real time onto the actors as they appear on screen, with each model positioned according to the tracked location of the LEDs.
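The head replacement can be sketched as a lookup table that decides which head model is drawn on which tracked actor, combined with projecting each tracked LED position to a screen anchor. Everything here is an illustrative assumption: the pinhole camera parameters (`focal_px`, `center`), the LED-to-head-center offset, the `head_swap` table, and the names `HeadPlacement` and `place_heads` are hypothetical and not taken from the production.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple

Vec3 = Tuple[float, float, float]


@dataclass
class HeadPlacement:
    actor: str                      # whose tracked body the head is drawn on
    head_model: str                 # which 3D head model is rendered there
    screen_xy: Tuple[float, float]  # pixel position where the model is anchored


def project(point: Vec3, focal_px: float,
            center: Tuple[float, float]) -> Tuple[float, float]:
    """Pinhole projection of a camera-space point (meters) to pixel coordinates."""
    x, y, z = point
    return (center[0] + focal_px * x / z, center[1] + focal_px * y / z)


def place_heads(led_positions: Dict[str, Vec3],
                head_swap: Dict[str, str],
                led_to_head_center: Vec3 = (0.0, 0.1, 0.0),
                focal_px: float = 800.0,
                center: Tuple[float, float] = (320.0, 240.0)) -> List[HeadPlacement]:
    """Anchor a head model on each tracked actor.

    Assumes camera coordinates with y pointing down and an LED mounted about
    10 cm above the head center (both hypothetical)."""
    placements = []
    ox, oy, oz = led_to_head_center
    for actor, (x, y, z) in led_positions.items():
        head_center = (x + ox, y + oy, z + oz)
        model = head_swap.get(actor, actor)  # by default an actor keeps their own head
        placements.append(HeadPlacement(actor, model, project(head_center, focal_px, center)))
    return placements


# Example: actor_b's body is shown wearing actor_a's head (a "cloned" identity).
leds = {"actor_a": (0.0, -0.1, 2.0), "actor_b": (0.5, -0.1, 2.0)}
placements = place_heads(leds, head_swap={"actor_b": "actor_a"})
for p in placements:
    print(p)
```

Separating the identity table from the geometry means a scene change in the script only has to edit `head_swap`, while the tracked LED positions keep driving where each model appears.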

Pre-recorded voice tracks were embedded in the live video stream, synchronized with the script and the action, and a lip-sync technique animated the 3D heads' lip movements. Each model was built from a sequence of “visemes” (the visual mouth shapes that correspond to speech sounds), which are interpolated (morphed) to synchronize with the voice track. This makes the model “talk” in real time, in sync with the other actors on stage.
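The viseme morphing can be sketched as keyframed linear interpolation between mouth shapes. This sketch assumes each viseme is stored as a mesh with the same vertex order, and that the voice track has already been converted into time-stamped viseme keyframes; the function names, the keyframe format, and the toy two-vertex "mouth" are hypothetical rather than the production's actual data.

```python
from bisect import bisect_right
from typing import Dict, List, Sequence, Tuple

Vertex = Tuple[float, float, float]


def lerp_mesh(a: Sequence[Vertex], b: Sequence[Vertex], t: float) -> List[Vertex]:
    """Linearly morph between two viseme meshes that share the same topology."""
    return [tuple(pa + (pb - pa) * t for pa, pb in zip(va, vb))
            for va, vb in zip(a, b)]


def mouth_at(time_s: float, track: List[Tuple[float, str]],
             visemes: Dict[str, List[Vertex]]) -> List[Vertex]:
    """Return the mouth mesh at time_s.

    track: (start_time, viseme_name) keyframes sorted by time, assumed to be
    derived from the pre-recorded voice track."""
    times = [t for t, _ in track]
    i = bisect_right(times, time_s) - 1  # last keyframe at or before time_s
    if i < 0:
        return list(visemes[track[0][1]])   # before the first keyframe
    if i >= len(track) - 1:
        return list(visemes[track[-1][1]])  # after the last keyframe
    (t0, v0), (t1, v1) = track[i], track[i + 1]
    alpha = (time_s - t0) / (t1 - t0)       # 0.0 at t0, 1.0 at t1
    return lerp_mesh(visemes[v0], visemes[v1], alpha)


# Toy mouth with two vertices (upper lip, lower lip).
visemes = {
    "rest": [(0.0, 0.0, 0.0), (0.0, -1.0, 0.0)],
    "AA":   [(0.0, 1.0, 0.0), (0.0, -3.0, 0.0)],
}
track = [(0.0, "rest"), (0.5, "AA"), (1.0, "rest")]
print(mouth_at(0.25, track, visemes))
# → [(0.0, 0.5, 0.0), (0.0, -2.0, 0.0)]
```

Linear interpolation is the simplest morphing scheme; smoother easing curves or blending more than two visemes at once are common refinements, but the write-up does not say which the production used.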

“Everyman, The Ultimate Commodity”

Starring Gerald Chew, Debra Teng and Sara Yang.

Stage play adapted by Daniel Jernigan from the short story by Gopal Baratham.

Artistic/Technical Direction by Russell Pensyl; Dramatic Direction by Gerald Chew.

Electrical and Software Engineering by Lee Shang Ping and Ta Huynh Duy Nguyen.